- By: Admin

- June 23, 2023

ChatGPT is one of the latest generative AI tools, known more for its public accessibility than for flawless functionality. While any algorithm produces output, generative AI models are the ones that produce new content such as images, text, and audio. Their goal differs from that of traditional AI models, which typically try to predict a specific number or choose from a set of options.

ChatGPT's popularity keeps increasing, and so do its risks, which range from ethical to commercial. It has great potential to disrupt many industries, and it can be a force for responsible development if properly used. It became one of the fastest-growing consumer applications, crossing a million users shortly after launch.

Keep reading to learn how regulation is changing for the commercial sector, what the European Commission has proposed, and which AI-related laws are already in place.

How Italy's Ban Impacted The Adoption Of ChatGPT

Concerns about AI tools such as OpenAI’s ChatGPT are turning into action at the national level as governments draft new regulations and policies for the technology.

Italy’s ban was not about the adoption of ChatGPT’s technology itself but about compliance with the GDPR. Lenin voiced concern about how other governments would regulate AI tools. Regulators could follow the GDPR approach and place the compliance duties on businesses that choose tools such as ChatGPT, which is why, he said, business leaders must prepare for future regulation of large language models.

The Italian Government temporarily banned ChatGPT in early April, citing concerns about data privacy and national security, and later announced that the ban had been lifted. Afterwards, the French Government started assessing the tool, and the European Data Protection Board created a task force focused solely on the technology and the privacy rules that apply to it.

In the United States, the White House has requested more information about AI risks. The country has yet to advance rules and regulations for AI. Davidson stated that responsible AI could bring numerous benefits, but only if its potential harms and consequences are addressed.

Regulators May Face Odds For AI Rules

There are many concerns besides data privacy, said Herman. One is the sheer amount of data that large language models collect to build powerful machine learning models, which could include protected data such as copyrighted material. Another is the potential impact of the emerging technology on jobs, social structures, and the economy.

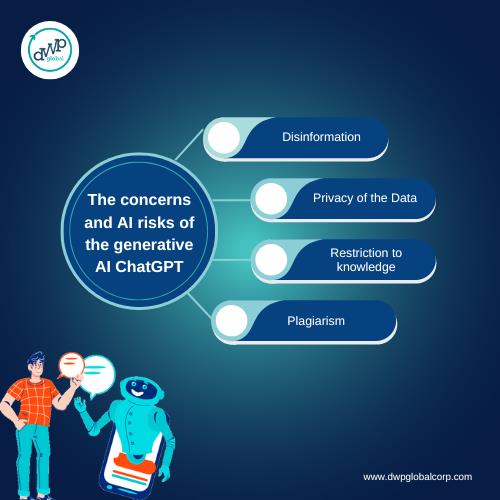

Let’s discuss the main concerns and risks of generative AI tools such as ChatGPT.

Disinformation:

Generative AI carries a high risk of misinformation and impersonation, as it can produce content that closely resembles human-authored work.

Privacy of the data:

There is always a risk that information a user provides is shared with others without consent and then misused, especially in industries like healthcare.

Limited knowledge:

ChatGPT was trained on large amounts of data, and it can be extended with new features and improved. At present, however, its knowledge is limited to data up to 2021, and it is not aware of more recent developments.

Plagiarism:

According to one survey, 89% of US students surveyed use ChatGPT to complete their assignments, which poses a risk to the country’s educational system.

Herman cautioned regulators that they should consider holding businesses accountable if such technologies cause harm, and added that building trust in these systems matters more than regulation. The Center for Data Innovation argued that the growing alarm over AI systems threatens the United States’ pro-innovation approach to the digital economy.

OpenAI has responded to many concerns about ChatGPT, including data privacy and risk management. The company spent six months assessing GPT-4 to better understand its benefits and risks, and noted that improving the safety of AI systems may require even more time.

The Rapid Evolution Of AI Systems Is Leading To AI Regulation

Over the last few months, lawmakers and regulators across the globe have made one thing clear: new regulations will soon shape how companies use artificial intelligence.

In March, the five largest federal financial regulators in the US issued a request for information on how banks use artificial intelligence, a sign that new guidance is coming for the financial sector.

A few weeks later, the United States Federal Trade Commission released guidelines that define the illegal use of AI broadly as any use that causes more harm than good. On April 21, the European Commission released its proposal for regulating artificial intelligence, which includes fines of up to 6% of a company’s annual revenue.

Virginia’s Consumer Data Protection Act, for example, requires assessments for certain types of high-risk algorithms. In the European Union, the GDPR requires comparable impact assessments for high-risk processing of personal data.

Unsurprisingly, impact assessments form a central part of the AI regulation proposed by the European Union, which requires an eight-part technical document for high-risk AI systems along with a risk management plan for addressing those risks. The EU proposal should look familiar to US lawmakers, because it aligns with the impact assessments required in a bill called the “Algorithmic Accountability Act,” proposed in 2019.

The next concern is accountability and independence. At a high level, AI systems should be tested for risks, and the data scientists and lawyers who evaluate them should have different incentives from the frontline data scientists who build them. In practice, this means AI is tested and validated by technical staff other than those who developed it.

Lawmakers and regulators stress that risk management is a continuous process. In the EU proposal’s eight-part technical documentation, a whole section is dedicated to the requirement to assess the performance of AI systems in the post-market phase. The specific requirements for assessing impacts, however, vary across these frameworks.

Decision-makers and policymakers have yet to ensure that AI development can be governed effectively on a global scale.

Business Risks Of Generative AI

Business applications of generative AI account for a major portion of the risk. Many companies want to adopt generative AI for uses that go well beyond content generation. Generative AI models are large and powerful, and they can be particularly good at generating images or text.

The main concern about collaborative deployment using ChatGPT is that the software development company may not sufficiently understand how the final AI system functions. The original developer did most of the work of building the AI model but cannot see the full extent of its use once it has been adapted for another purpose.

When generative AI technologies are used for consequential decisions in areas such as educational access, financial services, health care, or hiring, lawmakers should scrutinize them closely. The stakes for the people affected by such decisions can be high.

Implementations like “KeeperTax,” which uses OpenAI models to review tax statements and find tax-deductible expenses, are raising the stakes. Also in the high-stakes category is DoNotPay, a company that claims to offer AI-generated legal advice based on OpenAI models.

Our software development company offers numerous services, including cybersecurity, business intelligence, and legal modernization.

Conclusion

Given the capabilities of artificial intelligence, the commercial sector and governments are preparing to invest billions of dollars in AI tools and technology. Some countries are already evaluating the use of AI in defense and security.

Governments across the globe are approaching AI risks in similar ways, which means the basics of AI regulation are already becoming clear. Companies adopting AI now, and those making sure their existing AI remains compliant, do not need to wait to start preparing.